One thing that should always be done when using 1Gb iSCSI is setting up multipathing, and every version of vSphere includes NMP (Native Multipathing). NMP comes with a path selection policy called Round Robin which, as the name suggests, sends IO down each path in turn. By default, VMware sends 1000 IOs down a path before switching to the next one. However, by lowering that limit to 1 IO before switching paths, we can sometimes achieve much greater throughput because we make more effective use of our links.
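To make the effect of the IO limit concrete, here is a minimal shell sketch of a *simplified* model of Round Robin. It is an assumption for illustration, not VMware's actual NMP code: the path simply changes after every LIMIT IOs. The function names `rr_paths` and `rr_distribution` are hypothetical, and the burst of 32 IOs used below is an arbitrary example figure.

```shell
#!/bin/sh
# Simplified model (assumption): Round Robin moves to the next path after
# every LIMIT IOs. Prints the 0-based path index used by each IO.
# Usage: rr_paths IOS LIMIT PATHS
rr_paths() {
  i=0
  while [ "$i" -lt "$1" ]; do
    echo $(( (i / $2) % $3 ))
    i=$((i + 1))
  done
}

# Summarise how many of the IOs land on each path, as "path:count" lines.
# Usage: rr_distribution IOS LIMIT PATHS
rr_distribution() {
  rr_paths "$1" "$2" "$3" | sort -n | uniq -c | awk '{ print $2 ":" $1 }'
}
```

In this model, `rr_distribution 32 1000 2` prints `0:32` (the whole burst goes down one path), while `rr_distribution 32 1 2` prints `0:16` and `1:16` (the burst is split evenly across both links).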

There has been much debate over whether this is really worth it, so I decided to try it out and see what effect it would have. The test environment I used included the following hardware:

- HP P4300 (LeftHand) SAN with 2 storage nodes

- HP DL380 G7 server with two 6-core Intel 5675 CPUs (3.07GHz ;)) and 72GB of RAM

- Cisco 3560G switch

I set up the HP P4300 SAN with 802.3ad bonded links to the switch and turned on channel groups in passive mode on those links. On the server, I installed ESXi 5.0 and used two VMkernel interfaces on two NICs. Rather than the VMware software iSCSI initiator, I used the Broadcom iSCSI offload initiators because they are easy to set up in ESXi 5.0.

After mounting my LUN and formatting it as VMFS5, I set the path selection policy to Round Robin. I then installed a Windows 2008 R2 VM and followed the instructions from VMKtree.org for running IOmeter (just as I did for the other SAN tests).

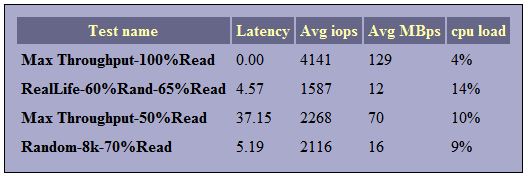

Here are the results from a standard ESXi 5.0 host using Round Robin with the default IO limit of 1000 per path per turn. Maximum Read throughput is 129MBps, and RealLife IOps are almost 1600.

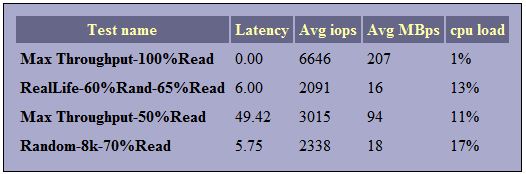

Now let's see what turning the IO limit down to 1 IO per path per turn gets us. With this setting we achieve 207MBps of Maximum Read throughput, and RealLife IOps rise to 2091. Plus every other number went up as well!

That is roughly a 60% increase in Maximum Read throughput and a 30% increase in RealLife IOps!
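The percentages above can be checked with a quick calculation. This is a minimal sketch; `pct_increase` is a hypothetical helper, and because the baseline RealLife figure is given as "almost 1600", using 1600 slightly understates that gain.

```shell
#!/bin/sh
# Percentage increase from OLD to NEW, rounded to the nearest whole percent.
# Usage: pct_increase OLD NEW
pct_increase() {
  awk -v old="$1" -v new="$2" 'BEGIN { printf "%.0f\n", (new - old) / old * 100 }'
}
```

Here `pct_increase 129 207` prints `60` (Maximum Read throughput) and `pct_increase 1600 2091` prints `31` (RealLife IOps), in line with the rounded figures quoted above.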

So how do you change the limit?

Setting the limit to a lower value is described in the HP EVA best practices guide, but I could not get their command to work.

The command for ESXi 5 is:

```shell
esxcli storage nmp psp roundrobin deviceconfig set -d naa.devicename --iops 1 --type iops
```

To find the device name, you can use:

```shell
esxcli storage nmp device list | grep naa.600
```
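If a host has several LUNs, the two commands above can be combined into a loop. This is a sketch only, assuming the ESXi 5.x `esxcli storage nmp` namespace and the ESXi Shell's POSIX `sh`. The function names and the default `naa.600` prefix are assumptions, so check the prefix against your own `device list` output before running anything. Anchoring the grep with `^` matters because each device's indented "Device Display Name" line also contains its naa ID.

```shell
#!/bin/sh
# Print only the device ID lines (they start in column 1) whose ID begins
# with naa.PREFIX. PREFIX defaults to 600 (an assumption; adjust per array).
# Usage: ... | naa_ids [PREFIX]
naa_ids() {
  grep "^naa\.${1:-600}"
}

# Hypothetical helper: for every matching device, select Round Robin,
# set its IO limit to 1, then print the resulting Round Robin settings.
# Usage: set_rr_iops1 [PREFIX]
set_rr_iops1() {
  for dev in $(esxcli storage nmp device list | naa_ids "$1"); do
    esxcli storage nmp device set --device "$dev" --psp VMW_PSP_RR
    esxcli storage nmp psp roundrobin deviceconfig set --device "$dev" --type iops --iops 1
    esxcli storage nmp psp roundrobin deviceconfig get --device "$dev"
  done
}
```

After defining these functions in the ESXi Shell, running `set_rr_iops1` would apply the change to every matching device and print each device's Round Robin configuration so you can confirm the new limit.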

If you want to check out their guide, it can be found here:

http://h20195.www2.hp.com/V2/GetPDF.aspx/4AA1-2185ENW.pdf



Hi there,

Great article and loving the site! Really finding some useful info!

But I’d like to know: what software are you using to benchmark your solutions?

Hi Justin,

Great site, with some wonderful info in it.

I have a question for you about the IO tuning.

I know the value is vendor-specific, depending on what the SAN itself can support, but I was wondering if you had any experience with the Dell MD3200i.

I’ve had no luck getting Dell techs to give me a value for what it supports, and I am currently building a new 3-cluster Enterprise Plus environment with a RAID 10 MD3200i setup for Exchange 2010.

How many drives are in the RAID 10 set?

10 x 3TB drives in the RAID 10 set

If you have 10 x 3TB drives, I will assume they are 7200 RPM drives, so figure about 80 IOps per spindle.

Total IOps in a perfect world would be about 800.
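The estimate above is simple multiplication. A minimal sketch follows; the helper names are hypothetical, and the second function adds an assumption the reply does not make: that each RAID 10 write costs two back-end disk IOs (one per mirror side), so the usable figure shrinks as the share of writes grows.

```shell
#!/bin/sh
# Raw back-end IOps: spindles * IOps per spindle (about 80 for 7200 RPM, per the reply).
# Usage: raw_iops SPINDLES IOPS_PER_SPINDLE
raw_iops() {
  echo $(( $1 * $2 ))
}

# Assumption: RAID 10 write penalty of 2. Estimates front-end IOps for a
# workload that is READ_PCT percent reads, rounded to a whole number.
# Usage: raid10_iops RAW_IOPS READ_PCT
raid10_iops() {
  awk -v raw="$1" -v readpct="$2" 'BEGIN {
    r = readpct / 100
    printf "%.0f\n", raw / (r + 2 * (1 - r))
  }'
}
```

`raw_iops 10 80` prints `800`, matching the reply's perfect-world figure; `raid10_iops 800 100` also prints `800` for pure reads, while `raid10_iops 800 70` prints `615` for a hypothetical 70% read workload.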

So the answer is “whatever fits into 800 IOps”. If your machines are fairly idle, that might mean 20 VMs, but if you have a single SQL server that is pretty active, then that might be it.

Thanks for that, Justin.

It’s only going to contain about 13 VMs for the first 2-3 years.

What I am struggling with is finding good info on what size I should make the LUN, or whether I should go with multiple LUNs for ESXi 5.

I know the datastore limit is now 64TB, but the VMDK limit is still 2TB.

Do you go with multiple LUNs, or just one big LUN with one big datastore?

Justin

Thanks for your great blog! It helped me a lot!

Was this on 1Gbps links?

If it was, then you basically hit the capacity limit of the NIC when the IO limit was set to 1000.

In a multi-LUN environment this may not be an issue. An easy way to test this would be to run 4 IOMeters against 4 LUNs and repeat the test.

The load should spread out over the two NICs on its own, and then you won’t be hitting a capacity ceiling.
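The link-capacity point in this comment can be put in numbers. A minimal sketch, assuming 1 Gbit/s = 1000 Mbit/s and MB/s in decimal megabytes (10^6 bytes), and ignoring Ethernet, TCP/IP and iSCSI overhead, so real ceilings are somewhat lower. The helper names are hypothetical.

```shell
#!/bin/sh
# Theoretical throughput ceiling in MB/s for LINKS links of GBIT Gbit/s each.
# Usage: link_ceiling_mbps GBIT LINKS
link_ceiling_mbps() {
  awk -v g="$1" -v n="$2" 'BEGIN { printf "%.0f\n", g * 1000 / 8 * n }'
}

# Observed throughput as a whole-number percentage of a ceiling.
# Usage: pct_of OBSERVED CEILING
pct_of() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%.0f\n", a / b * 100 }'
}
```

One 1 Gbit link tops out at about 125 MB/s (`link_ceiling_mbps 1 1`), so the 129MBps measured with the 1000-IO limit (`pct_of 129 125` prints `103`) is already at the single-link figure, in line with the commenter's point. The 207MBps measured with a limit of 1 is about 83% (`pct_of 207 250`) of the 250 MB/s two-link ceiling.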

This configuration tweak got me 300+ MB/s on an HP MSA P2000 using 1 Gbit iSCSI multipathing. Thanks a lot.

Awesome! Thanks for the feedback!
