The first time I installed Veeam, I had trouble getting it to back up virtual machines directly from the SAN. With direct SAN backup, the data flows from the SAN straight to the backup server instead of being proxied through an ESXi host. The benefits are clear: reduced CPU and network load on the ever-so-valuable ESXi resources.

The problem is that by default this just doesn’t work with Veeam if you haven’t properly set up your backup server. I will try to keep this process simple and vendor agnostic (from a SAN point of view).

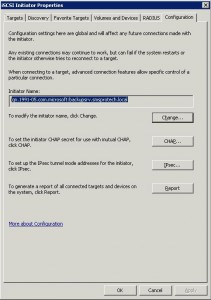

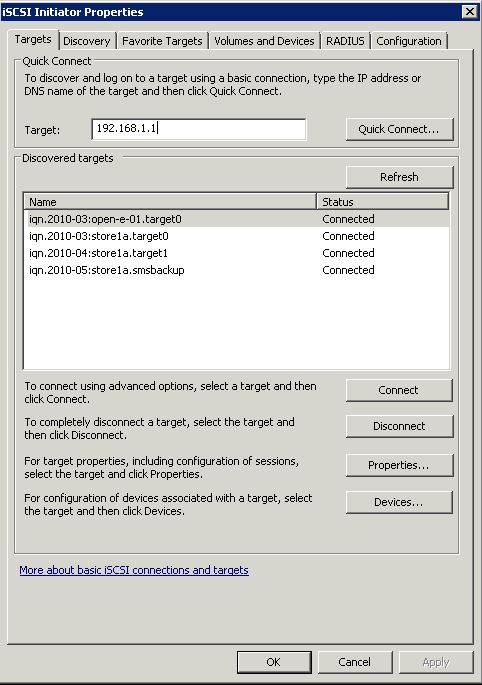

The first step to making the vStorage API “SAN backup” work is to make sure your backup server has the Microsoft iSCSI initiator installed. It is installed by default on Windows Server 2008; for Windows Server 2003 you will need to download the latest version from Microsoft.

Note: You will need to configure your SAN to allow the IQN of the iSCSI initiator to access the volumes on the SAN. This process is different for each vendor. See the screenshot for how to find the IQN on the Configuration tab of the iSCSI initiator.

After installing the Microsoft iSCSI initiator and setting up your SAN, configure the initiator to see the SAN volumes by opening the “iSCSI Initiator” option from Control Panel. At the top of the main tab there is a field for your SAN’s IP address; enter it and press Quick Connect. Shortly, a list of all of the volumes that your backup server has access to should appear. Once it does, select each one and press the “Connect” button. Because the volumes are formatted as VMFS, Windows will not show them in My Computer, but if you go to Disk Management inside Computer Management you should see that the backup server can see these volumes.
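If you would rather script the initiator setup than click through Control Panel, here is a minimal sketch of how it might look using `iscsicli`, the command-line tool that ships with the Microsoft iSCSI initiator. The portal IP and the IQN in the example are placeholders I made up, and the helper names are my own. The sketch only builds the command lines; actually running them is left to a separate function that refuses to run anywhere but Windows.

```python
import subprocess
import sys


def build_iscsi_commands(portal_ip, target_iqns):
    """Return iscsicli command lines (as argv lists) for one SAN portal.

    portal_ip and target_iqns are supplied by the caller; nothing here is
    discovered automatically.
    """
    commands = [
        # Register the SAN's IP address as a target portal.
        ["iscsicli", "QAddTargetPortal", portal_ip],
        # List the targets the SAN exposes to this initiator's IQN.
        ["iscsicli", "ListTargets"],
    ]
    for iqn in target_iqns:
        # Log on to each target (the "Connect" button in the GUI).
        commands.append(["iscsicli", "QLoginTarget", iqn])
    return commands


def run_commands(commands):
    """Run the commands in order; only meaningful on the Windows backup server."""
    if sys.platform != "win32":
        raise RuntimeError("iscsicli is only available on Windows")
    for argv in commands:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    example = build_iscsi_commands(
        "192.0.2.10", ["iqn.2001-05.com.example:vmfs-lun1"]
    )
    for argv in example:
        print(" ".join(argv))
```

As far as I know, a login made with `QLoginTarget` is not remembered across reboots, so treat this as a starting point rather than a finished setup. Logging on is all the script does; it never touches the disks in Disk Management.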

Update: A note from the Veeam team: “One thing that we (Veeam) recommend is to disable automount on your Windows backup server. To do this, open a command prompt, type diskpart, and hit Enter. Then type “automount disable”. This is to ensure that the Windows server doesn’t try to format the volumes at all. However, before any of this is done, if your SAN software allows it, give the Veeam Backup server read-only access to your VMFS volumes.”
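The diskpart step can also be scripted. Below is a rough sketch, assuming you run it from an elevated prompt on the backup server. It writes a two-line diskpart script to a temporary file and passes it to `diskpart /s`. The function names are mine and are not anything Veeam ships.

```python
import os
import subprocess
import sys
import tempfile


def build_diskpart_script():
    """Return the diskpart script text that turns automount off."""
    return "automount disable\nexit\n"


def disable_automount():
    """Run diskpart with the script above (Windows only, needs admin rights)."""
    if sys.platform != "win32":
        raise RuntimeError("diskpart is only available on Windows")
    # diskpart reads its commands from a file passed with /s.
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as handle:
        handle.write(build_diskpart_script())
        script_path = handle.name
    try:
        subprocess.run(["diskpart", "/s", script_path], check=True)
    finally:
        os.remove(script_path)


if __name__ == "__main__":
    # Print the script instead of running it, so this is safe to try anywhere.
    print(build_diskpart_script(), end="")
```

Calling `disable_automount()` is the scripted equivalent of typing the two commands by hand; it does nothing about read-only access on the SAN side, which still has to be set in your SAN software.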

After performing these steps, go ahead and configure Veeam to use the SAN backup option. You should notice (especially if you have separate NICs for the SAN network) that all of your data is moving from the SAN directly to the backup server without proxying through the ESXi hosts.

“but if you go to Disk Management inside of Computer manager you should now see that the backup server can see these volumes”

Do the volumes need to be initialized?

NO! Do not do ANYTHING to the disks inside of Computer Management. If you try to mount them in Windows you will be asked to format them... THIS WILL DELETE ALL of the data on that LUN... very bad! Veeam just needs them “Logged On” inside of the iSCSI initiator. After you log them on, that is all you need to do.

This information is (glaringly?) missing from the Veeam documentation. I muddled my way through, but your post here is confirmation that I did everything correctly. I concur that the Veeam Backup server should have only read access to the VMFS volumes; that prevents all issues with accidental deletion, formatting, etc. You can also corrupt volumes when more than one server accesses them for writing simultaneously, so read-only is your best bet.

I will implement the diskpart tweak for disabling automount, as I did not see that in the Veeam documentation either.

Thanks for an informative post!!

Justin,

Thanks for the great information. For most of my jobs I almost tripled my throughput compared to going through the network.

Thanks again,

Adrian

I am using EqualLogic, but I do not see anywhere under Access where I can set a volume to read-only for a specific initiator (i.e., the Windows host).

Does this require VCB to be installed?

No, VCB is the predecessor to the vStorage API method. The new method no longer requires a proxy machine.

A couple of updates from the Veeam Team 🙂

1. The setup program in Veeam Backup & Replication 5.0 or later automatically disables automount, so you do not have to worry about doing this manually.

2. Giving the Veeam Backup server read-only access to the VMFS LUNs is not really mandatory. We found that many SAN storage devices do not even provide this capability today (think Dell EqualLogic), but it seems that many customers think it is a mandatory requirement for direct SAN backup. So I wanted to be very clear that this is NOT a requirement, just an extra level of protection from user error (accidentally initializing those disks). Just learn not to do that, and remove all other users from the Local Administrators group on your Veeam Backup server to make sure someone else does not do it accidentally.

Thanks for the blog post!

Thanks for the update Anton!

It's not just Veeam that has this poorly documented; other backup products are similar.

I would not advocate using shared LUNs at all, even if Veeam automatically turns disk automount off.

WHY:

I tested whether automount would remain disabled after Windows patching or updating with a maintenance pack.

After Windows patching, the automount setting had reverted to its default value, i.e. automount was ENABLED.

I would assume that at the reboot after patching the VMware backup server, the LUNs would be initialised by automount and you would have lost all your VMware LUN data.
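If you are worried about the setting reverting after patching, one option is to check it after every patch cycle. Here is a small sketch that reads the `NoAutoMount` value mentioned elsewhere in these comments. I am assuming it lives under the MountMgr service key in HKLM, so verify the path on your own server before relying on it. The function names are mine.

```python
import sys

# Assumed location of the NoAutoMount value; verify on your own system.
MOUNTMGR_KEY = r"SYSTEM\CurrentControlSet\Services\MountMgr"


def automount_is_disabled(no_auto_mount_value):
    """Interpret the registry value: 1 means automount is disabled.

    A missing value (None) or 0 is treated as the default, automount enabled.
    """
    return no_auto_mount_value == 1


def read_no_auto_mount():
    """Read NoAutoMount from the registry (Windows only); None if not set."""
    if sys.platform != "win32":
        raise RuntimeError("the registry is only available on Windows")
    import winreg  # imported here so the module still loads on other systems

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, MOUNTMGR_KEY) as key:
        try:
            value, _value_type = winreg.QueryValueEx(key, "NoAutoMount")
        except FileNotFoundError:
            return None
    return value


if __name__ == "__main__" and sys.platform == "win32":
    if automount_is_disabled(read_no_auto_mount()):
        print("automount is disabled")
    else:
        print("WARNING: automount is enabled")
```

Run as a scheduled task after patching, something like this would at least tell you the setting changed before the SAN connections are re-established.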

I agree that there could easily be data loss. However, if the Veeam backup server is configured to have only read access to the LUNs, there is no chance of data loss. I did not include that in the review because making a server read-only for a LUN is a very different process depending on the SAN vendor and the medium being used (FC or iSCSI, for example).

I am very thankful for this topic because it really gives great information.

Have to agree with Jack. Dell needs to get a read-only option on the access list. Currently you can only set an entire volume to read-only.

Hi all,

I have followed all of the above and the throughput has not changed at all compared to backing up over the network.

I can see the backup using the correct NIC, which is directly connected to the SAN network (1Gb NIC, and the SAN is configured), but all I see in the Veeam backup is

Process rate = 52 MB/s

I thought I would see something near 1Gb.

Is this throughput normal?

Nile,

For the first pass, before changed block tracking is enabled, that is about right depending on your CPU speed.

After changed block tracking is enabled you will see speeds of 100+ MB/s. I've seen as high as 3 GB/s on a setup I did yesterday.
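To put the 52 MB/s figure in context, it helps to convert the link speed into the same units. This is just arithmetic, assuming decimal units and ignoring iSCSI and TCP overhead, so the real ceiling on a 1Gb link is a bit lower than what the sketch prints.

```python
def link_capacity_mb_per_s(link_gbit_per_s):
    """Convert a link speed in Gbit/s to MB/s (decimal units, no overhead)."""
    return link_gbit_per_s * 1000 / 8


def link_utilisation(rate_mb_per_s, link_gbit_per_s):
    """Fraction of the link's theoretical capacity used at the given rate."""
    return rate_mb_per_s / link_capacity_mb_per_s(link_gbit_per_s)


if __name__ == "__main__":
    print(link_capacity_mb_per_s(1))          # 125.0
    print(f"{link_utilisation(52, 1):.1%}")   # 41.6%
```

So a 1Gb NIC tops out at about 125 MB/s on paper, and 52 MB/s is roughly 42% of that. Rates well above the link capacity, like the multi-GB/s number above, presumably come from changed block tracking letting Veeam skip unchanged blocks rather than from more data actually crossing the wire.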

Hi,

I have a question about NoAutoMount.

I have read this in the comments above:

“1. Veeam Backup & Replication 5.0 or later setup program automatically disables automount, so you do not have to worry about doing this manually.”

1. Does this mean that the NoAutoMount registry key will be set to 1 when Veeam 5 is installed?

2. If so, then I have a problem backing up the system state of the Veeam backup server. Unfortunately, to back up the system state you need to mount the MSR partition, and that is done by making sure NoAutoMount is set to its default value of 0 (automount enabled).

So my question is: how do I back up the system state when Veeam disables automount?

thanks

Just wanted to reply to my own post, as this is a really important topic and should be followed to the letter.

Automount must be disabled as stated in this blog, otherwise Windows may re-signature the VMFS volumes. This is just normal Windows behaviour: when it sees new volumes, it tries to re-signature them.

Unfortunately this means that we cannot back up the system state of the Veeam server. It is just one of those issues that might be fixed in new Windows versions.

thanks

Veeam 5 does in fact disable automount by default.

Normally, to back up a Veeam server (which we usually make a separate physical box to take advantage of the Instant Recovery feature), we would use Symantec or Arcserve or even Windows Backup, and would offload it to tape directly.

Veeam cannot back itself up; it's a chicken-and-egg sort of thing. As for the system state, I don't really know that it is needed... if your Veeam server does crash, the absolute worst thing that could happen is that you have to start a new backup chain, which in most cases isn’t the end of the world.

Hello,

In my environment, I have Veeam installed on a VM and I have followed this practice, but I still don’t see better performance when backing up or restoring a VM. The speed is still 10 to 15 MB/s.

What other issues could be slowing down my backup?

Thanks,

I have started getting an issue where the Veeam backup server (the whole PC) was performing so badly it was unusable.

I removed the connections to the SAN and now it seems fine (I tried everything else first, though).

Any suggestions why this might occur?

thanks Justin for the informative Post. Thanks also goes to the commenters that provided further info!

Found it very useful.

Hi there,

Two questions:

If the Veeam backup server is configured to have only read access to the LUNs, how will snapshots be created for the backup process?

Second, will I have to change the access property (read-only to read/write) when restoring a VM? Or will Veeam pass through ESX (and the LAN) in that case?

Thanks for the posts.

When you install Veeam you tell it about your vCenter server, and when you go to do a backup Veeam tells vCenter to take a snapshot. Then Veeam figures out which files it needs to back up and gets them from the SAN (vCenter tells Veeam which LUN and which blocks). That is why read-only is sufficient.

You are correct on the restore process. Because Veeam can only read raw blocks from a LUN, it must proxy the data back through vSphere or ESX and let ESX write it in VMFS format to the LUN.

Justin, thanks for this great article.

As mentioned in other posts above, our EMC SAN (Clariion CX4-120) also has no way to set a LUN (via iSCSI) to read-only.

I am currently doing some performance tests. But if I weigh the security risk (deleting LUNs via Windows) against the performance benefits, I think I will go back to virtual appliance mode.

In my first tests, virtual appliance mode doesn't seem to be much slower, but my Veeam installation is also running as a VM inside my vSphere infrastructure (so all the traffic is kept inside our 10GbE fabric).

Wouldn’t having Veeam as a virtual appliance be in direct contradiction of a disaster recovery setup?

How does one get the Veeam VM back in order to restore the rest of the VMs?

sebus

Veeam stores all of its metadata with the backup files. As long as you are protecting those backup files with offsite replication, or at least on media that is outside of your SAN, you can quickly spin up a Windows server, import the Veeam backups into the new Veeam server, and restore from that. This process does not take much time at all.

I must be totally missing something, but I don’t know what. I have a Windows 2k8 R2 server with 2 NICs and a lot of local storage. One NIC is a 1G on our 10.139.x.x net, which all servers, PCs, etc. access. The other is a 10G NIC on a 10.140.x.x net, which the storage, the ESX servers, and the Veeam box are on. (The ESX servers, Veeam, and the 2k8 box are also on the 10.139 net.) Since the 2k8 server and the ESX servers are on the 10G backbone, the source VM is on ESX, and the destination for the Veeam backup is on the 2k8 server, I am looking for the fastest avenue of transport from source to target. I thought that if I installed the MS iSCSI Target software on the 2k8 box, created an iSCSI target from its local storage, and then configured Veeam with the iSCSI initiator software to access that target, it would improve throughput. On the Veeam box I have added the iSCSI target (the 2k8 box). Now I can see the target under Storage Manager on the Veeam box but cannot figure out how to add it as a repository so that I can back up to it. Maybe I am going about this all wrong, but there is probably somebody else out there as clueless as me who needs help. What would be the best way to take advantage of this 10G backbone to back up from ESX to the 2k8 box? Simply adding the 2k8 box as a Windows drive letter to the Veeam box only gives me about 39M throughput. I know I can get faster than that. Thanks for tolerating my newbieness.

Tony,

You need to make sure that your Veeam backup server has read access to all of the iSCSI LUNs used by VMware. At that point you tell Veeam to back up the VMs and it should use SAN transport mode. If it is using NBD mode, you are bound to get really bad transfer rates.

Let me know if you still can't get it; maybe we can do a WebEx or something.

Justin,

Thanks very much for this informative article. The additional comments by others are also much appreciated.

Regards.

Mike

Hi- I am backing up VMs which reside on a large number of iSCSI LUNs. I am testing this method. Is there a way to connect multiple targets at once using the MS iSCSI initiator? Better yet, is there a way to export the list of favorites from one backup proxy to another?

Not sure. I doubt it, though. Your best bet would probably be to use PowerShell to see if you can add them that way.

Hi J

I think I’m going nuts here. I followed your article (nice one) on setting up the MS iSCSI software initiator, but every time I start a backup job it tells me “Direct SAN connection is not available, failing over to network mode” and I get around 30-37 MB/s in NBD mode.

The backup server is a Win2008R2 physical box with Veeam B&R 7 with all “roles”.

It has 2x integrated BCM5708 NICs, one on the production LAN and one for the SAN network.

I set up the MS iSCSI initiator as per your article. It connects to an EqualLogic box holding the VMFS volumes. I’m able to see the VMFS volumes in Windows Disk Management as offline.

What else do I need to check? I have also configured jumbo frames on the iSCSI NIC, since the switches use them as well. Flow control is also enabled on both the iSCSI NIC and the SAN switches.

Offload mechanisms on the NIC are enabled and the MCS policy in the initiator settings is set to Round Robin.

I hope you have some suggestions.

Thanks

I would just give the Veeam team a call; they are usually pretty helpful. It sounds like you have things set up right.

Hate to drag up an old thread, but just wondering if anything has changed for version 8, or is it OK to just set this up?

Also, is there any point in doing this on Veeam proxy VMs, or is the performance better with it set up on the physical Veeam box?

Merci

Hey Eddie.

V8 could present some differences in best practices, depending on your situation.

1.) If you have a dedupe appliance then you certainly want to use Veeam’s integration with it.

2.) If you have a Data Domain, DDBoost is a MUST! You are just silly if you don't use DDBoost.

3.) While physical proxies with direct SAN access are still considered the “best” way to do backups, I honestly prefer virtual proxies using hot add these days.

The biggest thing with physical proxies is that VMware is required to do a lot less work, meaning that all it has to do with direct SAN backups is take a snap.

If you use virtual proxies, then after the snap it also has to reconfigure the Veeam proxy VM to have access to the VMDK, which basically means more stuff for vCenter to slow down during the process, lol. But personally I haven't had any issues with it, and it keeps customers from having to run physical boxes.
