It looks like there is a new member of the VNXe family, a block-only version of the VNXe3200. There are some hardware differences too:

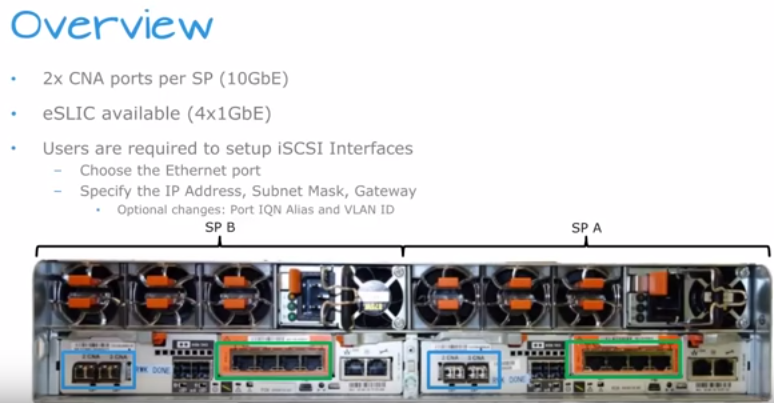

The VNXe3200 has 4 copper RJ-45 ports (per SP) that can be 10Gb iSCSI or 10Gb File (NFS / SMB); the VNXe1600 has two CNAs (10Gb iSCSI or 4/8/16Gb Fibre Channel) per SP. If you want 1Gbps Ethernet then you would need to purchase the 4-port eSLIC shown below in green.

Here is some more detailed information on the VNXe1600 via EMC marketing material:

My take

I think this will make a great addition to the lineup. While the VNXe3200 was cheap… it wasn’t cheap enough in some cases. In fact, I was doing some research for a small company a couple of weeks ago where the VNXe3200 would just barely fit in their budget. The VNXe1600 will be perfect; some sites are saying usable configurations start as low as 10k.

Right now a VNXe3200 with just a couple of drives and some FAST Cache would run you around 20k… so if all you need are the block features to hook up to your VMware cluster, then dropping that by half will be pretty awesome. Up until now that has been an area that only Dell and HP have played in with their MD and MSA lines.

Unfortunately, with my move to the vendor side and no longer working for a reseller, it may be some time before I get to check one of these out firsthand.


Good to know. I’m trying to squirrel away enough money to build out a lab and would really like a VNXe. The wife always seems to have a better use for the funds!

Yeah, I know how that goes 🙂 You can find VNXe3100s pretty cheap these days if you look hard enough. A lot of them are now 3 years old and will be hitting the secondary market soon. The VNXe3200/1600 is a much nicer platform, but for a lab the 3100 still works great.

The differences I see between the 1600 and 3200 are that the 1600 does not have FAST tiering and it has a lot less cache per SP. For most use cases that shouldn’t be a problem.

If it is block only, it would not support NFS/SMB? Those protocols are file.

I’m confused. If it is block only why does it support NFS/SMB?

It doesn’t support those. If you re-read where I mentioned them, I said that the VNXe3200 supported those… not the 1600.

Gotcha, so they went back to a FLARE only OS, classic!

Maybe… they could also have the same OS on it and just not be enabling things, which would make the most sense, so that when customer XYZ outgrows the 1600 they can do an easy upgrade to a 3200. Just guessing.


Curious to know if any testing has been done regarding the practical performance implications of using the Vault (aka “system”) drives in a storage pool versus not doing so?

Especially in the SMB world that this SAN is designed for, giving up those first 4 drives solely for the OE is borderline laughable, so they tend to always get lumped in with the presentable storage in a pool; however, when doing so, Unisphere warns about the performance and capacity impact that will result.

Any thoughts?

I’m using the vault drives in the system I installed… no performance impact seen.

You have to remember that on the VNXe line of systems, the vault drives are not where data gets dumped in the event of a power loss. VNXe systems have an onboard SSD for that purpose. So they aren’t your grandpa’s vault drives.

Pretty much every VNXe system that I have installed has utilized the vault drives without any complaints.

I’ve always used the “vault” drives on the VNXe, and never run into issues either. At the price points they’re usually going into, the customer can’t afford to give up that space.

Thanks for the input, guys!

So performance isn’t much of a factor when deciding whether to use those system drives. However, in working with pre-sales (distribution) on a number of these, what I notice is that including them in a usable pool should depend on the capacity of the drives chosen for the array.

If you’re populating with 600GB drives or larger, the drives are generally considered available for pool usage. If you’re populating with 300GB drives, though, those drives are written off and not used. The reason is that so much of the drive is consumed by the system (I couldn’t get an exact number, and can’t find it on a datasheet, but I heard it was in the 200+ GB range) that there isn’t any point in trying to include them for presentable storage.
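To put rough numbers on that, here is a minimal sketch of the arithmetic in Python. The 200 GB system reservation is only the hearsay figure mentioned above, not a datasheet value, and `usable_fraction` is a hypothetical helper made up for illustration.

```python
def usable_fraction(drive_gb: float, system_reserved_gb: float) -> float:
    """Fraction of a system drive left for pool storage after the OE's share."""
    usable_gb = max(drive_gb - system_reserved_gb, 0)
    return usable_gb / drive_gb


# Assumption: ~200 GB per system drive is consumed by the OE (unconfirmed).
SYSTEM_RESERVED_GB = 200

for drive_gb in (300, 600, 900):
    fraction = usable_fraction(drive_gb, SYSTEM_RESERVED_GB)
    left_gb = drive_gb - SYSTEM_RESERVED_GB
    print(f"{drive_gb} GB drive: {left_gb} GB left for the pool ({fraction:.0%})")
```

Under that assumption a 300GB system drive keeps only about a third of its capacity for the pool, while a 600GB drive keeps about two thirds, which lines up with the rule of thumb above.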

Do you know the maximum number of initiators or hosts on a VNXe1600?

I found it… it is 256 initiators per SP.

Sorry for the delay… I was pretty busy this week.

Where did you find the maximum initiators/hosts in a VNXe1600?

Not sure I have ever looked for the maximum… mainly because the one I have installed only has two hosts attached to it. I would just look for the spec sheet on the EMC website though.

Hello there Guys

I have got a couple of VNXe DAEs with the product number VNX16K-DAE-25. Can I install them on my own VNX5200? I know that the correct part number is VNXB6GSDAE25F. Since both VNXB6GSDAE25F and VNX16K-DAE-25 have the same hardware specs, will they work the same?

So, will the VNX16K-DAE-25 work on a VNX5200 DPE?

Thank you

Your best bet would be to find the EMC interop matrix. Honestly, I would bet that it would work… because, like you said, the hardware is the same. What I cannot guarantee is whether the firmware is the same.

Worst case, if you plug it in and it doesn’t work, the VNX will report a fault on that shelf. If you can take some downtime, then all you need to do to “clear” the error is shut the VNX down, disconnect the shelf, and power it back up… the VNX will be back to normal after that.

I heard of a situation where a tech once attached some Data Domain shelves to a VNX and VNX shelves to a Data Domain… it worked until a firmware upgrade happened and the shelves refused to come up (on the VNX)… so just an FYI.