First a quick background for those unfamiliar with the P4000 line. HP bought a small company called LeftHand, which made a software product called SAN/iQ. This product could be installed on a server with lots of storage, and the box then magically becomes an iSCSI SAN. Big deal, you say? Well, what makes the LeftHand (now called HP P4000) so unique is its modular design, in which nodes can be grouped together to form clusters for fault tolerance and increased capacity.

So basically this post is a walkthrough of what you need to do to remove a node from a cluster and add new nodes to it. This process is important if you are:

- Retiring old nodes

- Adding storage capacity

- Migrating from SATA nodes to SAS nodes

- Any other reason that you might want to add or remove a node 🙂

Disclaimer –

Because this procedure is fairly important in terms of getting everything right to avoid data loss or corruption, I want to add an extra disclaimer in addition to the one on the about page.

While I do my best to make sure all angles are covered, this post in no way guarantees complete information. It is only meant to be a quick overview of how the procedure works. Before you do anything to equipment that could cost you your job, you should visit HP.com, get the official P4000 manuals and make sure you know the entire process, or call HP support. I take no responsibility for your actions on your hardware.

Getting started

To get started I will assume that you have your SAN up and running and that all new nodes have been given an IP address and are reachable from the “old” SAN nodes. Please note that this process will move data to the new nodes, so you will want to make sure that you have Gigabit Ethernet between all nodes. I would also make sure that your SAN is stable before starting; only the HP gods know where your data will be if a source or destination node goes offline during this process.

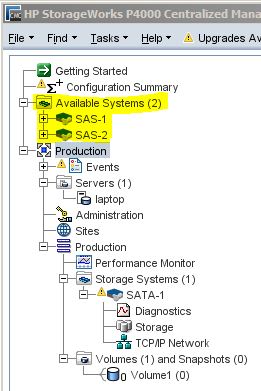

The first thing to do is to “find” your new nodes. To do this you can enter their IP addresses into the “Find Systems” wizard. After your nodes have been found they will show up in the tree on the left. At this point we have our nodes online and the other systems should be able to communicate with them.

The second step is to add the available systems to the Management Group in which they will be used. To do this, right click the Management Group they need to go in (in this example we are going to add nodes SAS-1 and SAS-2 to the “Production” Management Group) and select “Add Systems to Management Group”. A wizard will appear and ask you to select which nodes to add. Select both of the new nodes and click “continue”.

Now the nodes will move from the “Available systems” area into the “Production” Management Group. They are not however part of the “Production Cluster” yet.

Here is what it should look like when you have the nodes added to the Management Group.

The next steps will vary depending on what exactly you are doing. If you are only adding nodes to an existing cluster, you can skip the part where we remove the SATA-1 system from the cluster. If, however, you are replacing a node or migrating from old nodes to new ones, you can remove the old ones as this article shows.

Adding Nodes to the Cluster

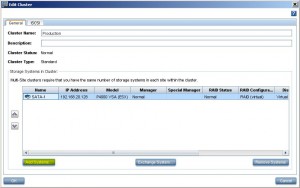

To add nodes to the cluster, right click on the cluster and select “Edit Cluster”. A box will appear with a list of nodes currently in the cluster. On this screen click “Add Systems”.

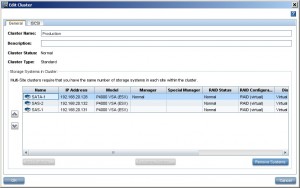

Next, a box will appear where you can select both nodes to add. After selecting the nodes, click “O.K.”. Now your list of systems should look like this:

As you can see in the previous screenshot I have the SATA-1 node highlighted. If you are only adding nodes DO NOT DO THIS NEXT STEP. If you are getting rid of the existing nodes then highlight the old system(s) and click “Remove Systems”. Then click “O.K.”

What happens now?

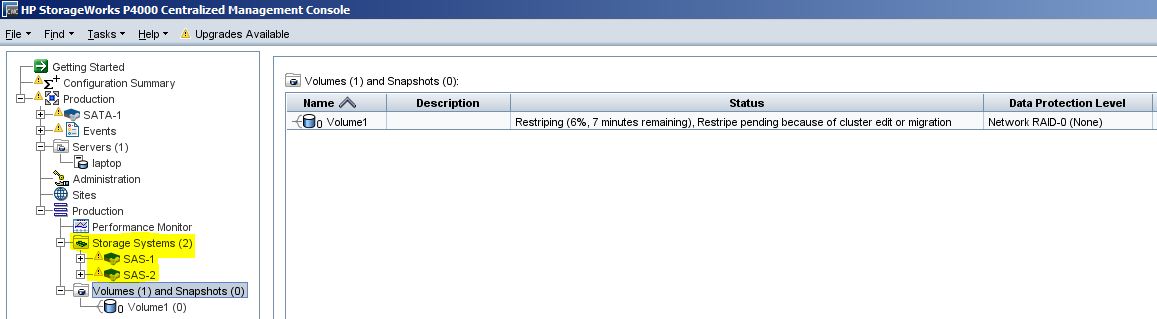

Basically, the management software on the SAN will figure out that we are adding new nodes and in this case, it also knows that we are removing a node. So it knows to move the data off the node(s) that are getting decommissioned and re-stripe the data across the new nodes. This process will take a long time depending on the amount of data you have on your volumes. The best place to monitor progress is on the “Volumes and Snapshots” section. See the screenshot, but basically, this tab will show you the re-stripe progress of each volume and each snapshot.

Besides the restriping status, this screenshot also shows the list of Storage Systems on the left. We can now see that we are using SAS-1 and SAS-2 for this cluster, and that the SATA-1 node, which was the only node in our cluster, is still in the management group but not listed as an available system (at least not yet). After ALL volumes and snapshots are restriped we can safely shut down the old node.
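As a rough way to gauge how long “a long time” might be, a back-of-the-envelope estimate can be sketched in Python. This is only a sketch under stated assumptions: the function name `restripe_hours` is made up for illustration, the figures used (2.7 TB of data, and rates of 8.5, 16 and 100 MB/s) are example values rather than measurements, and the model assumes every byte moves exactly once at a constant rate, which a real restripe will not do.

```python
def restripe_hours(data_tb: float, rate_mb_per_s: float) -> float:
    """Rough estimate of hours needed to move data_tb terabytes
    at a sustained rate of rate_mb_per_s megabytes per second.

    Assumption: decimal units (1 TB = 1,000,000 MB) and a constant rate.
    """
    data_mb = data_tb * 1_000_000
    seconds = data_mb / rate_mb_per_s
    return seconds / 3600


if __name__ == "__main__":
    # Example values only; substitute your own data size and observed rate.
    for rate in (8.5, 16, 100):
        hours = restripe_hours(2.7, rate)
        print(f"{rate:>6} MB/s -> roughly {hours:.1f} hours")
```

Treat the result as a planning aid for sizing a maintenance window, not as a prediction; the progress shown under “Volumes and Snapshots” is the real source of truth.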

That is pretty much it; it’s a fairly straightforward process. I would, however, make sure all your stuff is backed up OFF OF THE SAN, somewhere offline such as tape or a separate backup server.

Side Note

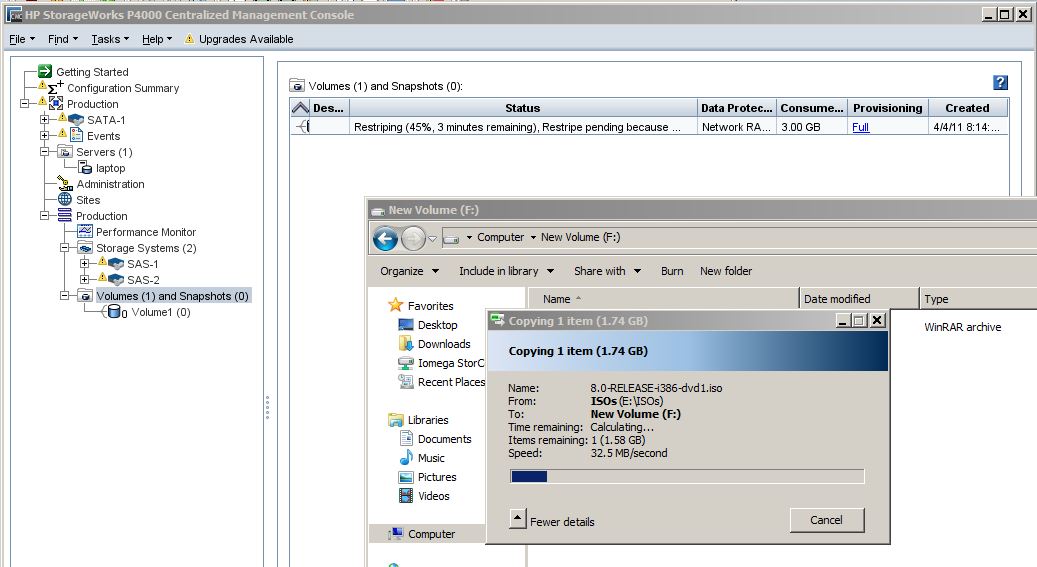

As a side note, I thought I should mention that even during this migration process all of your data will be online and available. The screenshot was taken while my SATA node restriped data to the 2 SAS nodes, and during that time I was able to copy data to the volume.

One trick that I used in a test environment was to treat the new node as a repair of one of the old nodes: this does not require two restripes and triggers only one resync.

The only catch is that you have to simulate a failure, but unplugging the network cable was enough.

LOL, that’s a little more risky in my opinion. What if you were to lose a couple drives in the other node while rebuilding?

Can you continue to create or remove volumes while the restripe is in progress?

Yes, everything is just slower during a restripe. You can also adjust how fast a restripe happens. It just depends on whether you want it done fast with lots of slowness, or you want it to take a long time with minimal impact on other things.

Justin – thanks, this was REALLY helpful

Simon / Adelaide – Oz

No problem! Glad the post helped.

Hi Justin, I’m going to add 2 nodes to my cluster (I currently have 2 P4300 G2), so there will be 4 nodes in total. How long will the restripe process take? We have a dual-port 10Gb NIC and 2.7 TB of data. Can I do it during production hours?

Thanks

Regards

It will restripe in the background. Performance will be degraded a little, but you certainly could do it during production as long as you’re willing to deal with the performance slowdown.

Artur / Justin

Word of caution – I use remote copies between two clusters (one at Head Office and one at DR). I also use VMWare’s SRM that sits on top of lefthand remote copies.

Last time I added a node, I had all sorts of probs and hindsight taught me I should have temporarily suspended remote copies during the restriping process.

If you or anyone else are interested in the details, drop me a line.

Justin – I’m so glad I subscribed to this blog – it is really helpful

Simon T – Adelaide – Oz (just starting to come out of a bl00dy cold winter – yay!)

Thanks for your reply Justin / Simon. I’m a little worried about how long the performance slowdown will last. The bandwidth is set to 16 MBs (default). Do you think this is going to affect the users’ work significantly?

My plan is to do it on Saturday and Sunday, but if this process takes more time, I would like to know the impact on production time.

Regards

Artur

My situation was a tad different from you in that I had TWO separate clusters – one at each site – so adding a 5th node at each site was running at 1 GBit speed.

As I understand it – you have a single cluster spanning a WAN – two nodes at present and are about to go to 4 (2 at each site).

[Justin (and others), please correct me if I’m wrong] but a few thoughts for ya:

1: Quorum:

Single cluster with 4 nodes (2 at each site) is a nightmare.

To mitigate the nightmare, ensure you install the VM (Virtual Manager) NOW – but leave it disabled (as I recall, it’s horrendous to do this once disaster strikes!).

Next issue though is where do you place the (downed) VM?….

If you place the VM at primary site and link to secondary fails – then primary will continue (you’d have to manually start up the VM at primary to get quorum of 3). However, if primary site fails, you are screwed – DR site only has two nodes and, as far as DR nodes are concerned, the VM resides at Primary site and can’t be started at DR. DR will not work with 2 nodes as quorum is 3. Gotcha.

If you place the VM at DR (ie Disaster Recovery or secondary site) then, if Primary site fails, at least DR will survive (once you crank up the VM at DR). Of course, in this scenario, if the link to DR goes down…. your Primary SAN goes offline! Gotcha.

My advice: have the VM at DR (powered down). So, if disaster hits, DR can continue. If you PLAN to down the link, beforehand you can always move the VM to Primary (and put it back afterwards!). The only downside here is if the link fails unexpectedly, Primary goes off the air until the link comes back online. You won’t lose data – SAN nodes just refuse to mount the SAN to prevent split brain.

2: You might wanna consider adding nodes into the cluster at primary site and getting them to restripe at LAN speeds and THEN shipping them to DR site (changing their IP addresses and site configs before they ship out)
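The quorum arithmetic in point 1 can be sketched in a few lines of Python. This assumes a simple majority rule (more than half of all configured managers must be reachable), which matches the “quorum is 3” figure above. The function names and the scenario layout are made up for illustration and are not part of any HP tool.

```python
def quorum(total_managers: int) -> int:
    """Smallest number of managers that forms a strict majority."""
    return total_managers // 2 + 1


def has_quorum(reachable_managers: int, total_managers: int) -> bool:
    """True if the reachable managers form a majority of all managers."""
    return reachable_managers >= quorum(total_managers)


# Assumed layout from the comment: 2 nodes at each site plus one
# Virtual Manager that has been started, so 5 managers in total.
TOTAL = 5

if __name__ == "__main__":
    cases = {
        "site with 2 nodes + started VM": 3,
        "site with 2 nodes only": 2,
    }
    for label, reachable in cases.items():
        print(f"{label}: has quorum = {has_quorum(reachable, TOTAL)}")
```

Under this model the site holding the Virtual Manager can reach 3 of 5 managers and keeps running, while the other site is stuck at 2, which is the trade-off described above.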

I hope this makes sense. Happy to continue batting this back/fwds on the forum so others can see/comment/benefit and/or if you wanna contact me direct – pl feel free – [email protected].

Happy to try and help you not go thru the sheer f**king pain I did when I was in your situation. (Thankfully now I have 5 nodes at each site – separate clusters – so quorum cr4p no longer bugs me)

Cheers – Simon / Adelaide – Oz

ps – to answer your question about user impact

depends – in my case – i mostly used the SAN for VMware VMs

user impact wasn’t that bad as I reckon most of the VM (virtual machines not virtual manager as per my prev msg!) were all running cached in ESX host ram….

Mind you, rebooting a VM might have been painful pulling the vmdk off a restriping disk…..

btw – ensure you disable any anti virus scanning on migration weekend – likewise, if you image VMs to the SAN (i use shadow protect), don’t forget to temporarily disable those during the restripe

I’m so sorry about my 16MBs bandwidth explanation, I was talking about the management group bandwidth. My two sites are in the same subnet running at 10 Gbs FCoE x 2. The P4300s are in RAID 10 (2-way mirroring).

A 10 Gbs WAN….! You lucky ba5tard 🙂 Obviously not stuck in an outback like Australia then are you? 🙂 (- heck, I don’t even have that between my core switches at my Primary site!)

That being the case, with Network RAID10 on, what – nodes of (8 x 420Gb disks) – I’d say a weekend would be ample time (so long as you disable a/v scanning, remote copies etc first)

But my dire warnings about quorum and the placement of the VM (virtual manager) remain!

(**wanders away muttering and grumbling about how lucky some techos are to have 10 Gbs WAN **)

🙂

Thanks for the reply, 🙂

The 10 Gbs is over the LAN, two sites interconnected with Fiber. That’s why we got 10 Gbs.

Regards

Anyone having slow restriping after a node maintenance activity? I’ve been chasing my tail thinking I had some sort of network performance issue, but after a bunch of testing it seems my pair of P4000 VSAs simply don’t want to restripe faster than about 8.5 MB/s, irrespective of the “local bandwidth priority” setting.

I want these guys to restripe quickly so I can continue with increasing the size of the virtual disks (remember you can only have 5, and I made the buggers too small), so I have to increase them one by one and endure a complete bloody system restripe each time.

yes… I’m an Aussie too, but living in Singapore.

Could you just create a new cluster with the right size disks and transfer the data from one VSA cluster to the other? Other than that it’s hard to say without knowing what type of hardware and network setup you have.

Also, have you tested how quickly data can be dumped to a LUN when it’s not degraded? (What is your baseline performance number?)

Question: I have a 6 Node Cluster set up in a Multi-Site Config (just separate buildings) and I need to replace all 6 Nodes. My plan is to add all 6 new Nodes to the Management Group and to the respective Site. Then I would add the 3 new Nodes in Site-A and remove the 3 old Nodes from Site-A. Restriping the 3 Nodes would most likely take 24 hours with 10G. When all is synced up, I would then do the same process with Site-B. Basically, replace the nodes one site at a time.

Am I good with that?

It’s been years since I have done this, but IIRC that was the process.