Ingredients:

1 – VMware Software Package

1 or 2 – Gigabit Network Switch

2 or 3 – 64-bit Capable Server

1 – SAN

Directions:

- Load servers with VMware ESXi and configure vSwitches

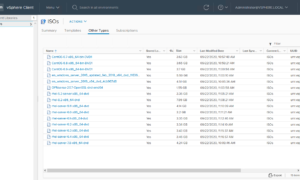

- Configure SAN, add SAN to ESXi servers and format datastores

- Install vCenter Server on a VM and configure it

- P2V old, tired, physical servers

- Save company thousands in downtime and new server acquisitions

I consider an SMB to be a company that has anywhere from 2 to 10 servers. SMBs have to be very conscious of what they spend their money on, but at the same time they need systems that are just as reliable as an enterprise’s. This is where VMware’s SMB packages, combined with a cost-effective SAN, can provide exactly what SMBs need to keep their systems up while making sure the company’s expenses don’t exceed profits.

The problem is that building a reliable solution takes many parts and pieces. These all add up, and when the I.T. department shows a complete solution quote to the CFO there can be a little sticker shock. Most of the time, after some explanation and an ROI assessment, the numbers make sense to the accounting department. Sometimes, however, it’s just not in the budget to spend $100,000 on a new infrastructure. That is where this article comes in. First I will lay out the complete solution, which describes what we want running our business when all is said and done. After that I will explain how to implement it in stages, so that we get what we want but only buy it as the budget allows.

The complete solution

So basically we want a solution that never goes down and serves all of the I.T. needs. Obviously that is a big generalization, but at the same time it is what businesses want. The downside is that reliability and redundancy cost money, so to make sure that things are redundant, let’s talk about where we want to end up. (Note: depending on workload, the cluster priced out below can run many more than 10 VMs.) Servers should always come with redundant power supplies and enough network ports to give every vSwitch a redundant pair of uplinks. To continue the redundancy we need redundant switches to plug those servers into, and finally we need redundant storage, either a SAN or an NFS server. So what about software, right? For SMBs, VMware has two packages designed for you. I would always recommend VMware Essentials Plus because it now comes with vMotion and the ability to use VMware Data Recovery. The SMB packages allow up to six physical CPUs with up to six cores each, so to maximize our investment I would recommend 6-core CPUs, which provide the most bang for your buck with this VMware package.

OK, so here is the list of what we need for a complete solution:

- 3 HP DL360 G7 Servers (about $26,000 MSRP)

- 2 Cisco 3750G 24-Port Gigabit Switches with SmartNet (about $18,000 MSRP) (you may want to go with the 3750X series, depending on price)

- HP MSA2000i (or EMC Clariion, or whatever) (about $20,000 depending on configuration)

- Liebert UPS with Micropod (about $5,000 MSRP)

- If you don’t purchase a Micropod, you will need to purchase two UPSs that can carry the full load, since the Micropod is what allows you to take the UPS out of service without shutting down the workload.

- Knurr Rack (about $2000)

- Network Cables (about $500…seriously)

- VMware Essentials Plus w/3 Years 24×7 Support (about $5,800)

- Microsoft Datacenter Licensing (about $15,000 for 6 processors)

Total Project Hardware and Software cost: about $93,000
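Adding up the line items is a quick sanity check on that total. The minimal Python sketch below uses only the rough estimates from the list above (they are ballpark MSRP figures, not quotes) and comes to $92,300, in line with the "about $93,000" figure before tax.

```python
# Approximate prices copied from the list above (USD). These are the
# article's ballpark estimates, not vendor quotes.
LINE_ITEMS = {
    "3 x HP DL360 G7 servers": 26_000,
    "2 x Cisco 3750G switches with SmartNet": 18_000,
    "HP MSA2000i SAN": 20_000,
    "Liebert UPS with Micropod": 5_000,
    "Knurr rack": 2_000,
    "Network cables": 500,
    "VMware Essentials Plus, 3 years 24x7 support": 5_800,
    "Microsoft Datacenter licensing (6 processors)": 15_000,
}


def project_total(items: dict[str, int]) -> int:
    """Sum the estimated cost of every line item."""
    return sum(items.values())


if __name__ == "__main__":
    for name, price in LINE_ITEMS.items():
        print(f"{name:<48} ${price:>7,}")
    print(f"{'Total':<48} ${project_total(LINE_ITEMS):>7,}")
```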

So let’s just say $100,000 by the time you add tax and other costs. That is quite a bit for an SMB to swallow at one time, but there are ways to make it much more appealing. Let’s take a yearly approach.

Year 1:

Purchase:

- VMware Licensing Bundle

- 1 – Cisco 3750G Switch

- 1 – Liebert GXT3-1500 UPS with Micropod (or GXT3-2000)

- Knurr Rack

- Required Network Cables

- 1 – HP DL360 G7 Server with 6 – 146GB Hard Drives

- 6 drives will eventually be split up with 2 in each server

Year 1 total investment: $28,500

That’s not such a bad number, right? Much nicer than $100,000. What we are doing is building the infrastructure that all of the IT hardware will sit in, plus starting off with one server with about 500GB of local storage and a single switch. Since we only have one server, we don’t have to worry about Microsoft licensing yet: our VMs have no other host to move to, so our current licensing can be moved over. We also don’t need a SAN at this point because, again, there is only one server. We only need about a third of the network cables that we budgeted for, and only one of the two UPSs. This configuration is still not going to guarantee 99.999% uptime, but it will be just as reliable as any other brand-new server that you would deploy. For backup at this point we will use VMware Data Recovery, writing to storage that an existing physical server shares via CIFS.
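To put "99.999% uptime" in perspective, the arithmetic is simple: the yearly downtime budget is the minutes in a year multiplied by the unavailable fraction. The sketch below assumes a 365-day year (leap years are ignored); at five nines the budget works out to roughly 5.3 minutes per year.

```python
# Minutes in a 365-day year; leap years are ignored for simplicity.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.99, 99.999):
        print(f"{target}% uptime -> {allowed_downtime_minutes(target):,.1f} min/year")
```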

Year 2:

Purchase:

- 1 HP DL360 G7 Server with no Hard Drives

- SAN, in this case MSA2000i or EMC Clariion (just something that does iSCSI is fine)

- More network cables

- 4 MS Datacenter Licenses

Year 2 total investment: $38,000

A little more than last year, but we are buying a $20,000 SAN, so that is going to make the number pretty steep. This stage adds the ability to start using HA: all of the virtual machines will be moved to the SAN, and four of the six drives in the original server will be removed. We will then install ESXi on the new server, join it to the cluster, and set up HA and vMotion. At this point we will be able to lose a server and a controller in the MSA without extended downtime (if a host fails, HA restarts its VMs on the surviving host).
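HA only helps if the surviving hosts have room for the failed host’s VMs. A rough way to sanity-check that while planning is to ask whether the cluster still has enough memory after losing its largest host. The helper below is hypothetical and deliberately simplified: real HA admission control also considers CPU, reservations and per-VM overhead, and the memory figures in the example are made up for illustration.

```python
def survives_host_failure(host_capacity_gb: list[int], vm_demand_gb: int) -> bool:
    """True if all VM memory still fits after the largest host fails.

    Hypothetical planning check only: it ignores CPU, reservations and
    per-VM overhead, which real HA admission control takes into account.
    """
    if len(host_capacity_gb) < 2:
        return False  # a single host gives HA nowhere to restart VMs
    remaining = sum(host_capacity_gb) - max(host_capacity_gb)
    return remaining >= vm_demand_gb


if __name__ == "__main__":
    # Illustrative numbers only (GB of RAM per host, GB demanded by all VMs).
    print(survives_host_failure([48], 40))          # Year 1: one host
    print(survives_host_failure([48, 48], 40))      # Year 2: two hosts
    print(survives_host_failure([48, 48], 60))      # Year 2, workload has grown
    print(survives_host_failure([48, 48, 48], 60))  # Year 3: third host added
```

The last two lines show one argument for the Year 3 server: once the workload outgrows a single host, the third host is what restores N+1 capacity.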

Year 3:

Purchase:

- Cisco 3750G 24 Port switch

- HP DL360 G7 Server (or whatever is available and has CPU compatibility with Nehalem)

- Liebert GXT3-1500 UPS with Micropod

- 2 MS Datacenter licenses

- Rest of the Network Cables (should need 9 more)

Year 3 total investment: $25,000 (approximately)
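With the Year 3 figure in hand, the phased plan can be compared with buying everything at once. Using the yearly totals given above (Year 3 is approximate), the three phases come to $91,500, close to the one-time estimate; what the phased approach changes is mainly when the money is spent. A short sketch:

```python
from itertools import accumulate

# Yearly totals as given in the article (USD); Year 3 is approximate.
PHASES = {"Year 1": 28_500, "Year 2": 38_000, "Year 3": 25_000}


def cumulative_spend(phases: dict[str, int]) -> list[tuple[str, int]]:
    """Pair each phase with the total spent by the end of that phase."""
    return list(zip(phases.keys(), accumulate(phases.values())))


if __name__ == "__main__":
    for year, spent in cumulative_spend(PHASES):
        print(f"{year}: ${spent:,} spent so far")
```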

To finish up the project, we will purchase the rest of the gear to make the network redundant and to add CPU and memory capacity as well as battery backup time. This year’s work is pretty easy to implement. We will load ESXi on the third server and join it to the cluster, then install the second Cisco switch in the stack, configure it properly, and move one of the two uplinks for each vSwitch over to it. Overall it is a pretty painless process that shouldn’t require any downtime.
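After moving uplinks, it is worth confirming that every vSwitch really has its two uplinks on different physical switches. The sketch below is a hypothetical planning checklist, not a VMware API: the inventory dictionary, vSwitch names, vmnic names and switch names are all illustrative assumptions, and in practice you would fill it in from your own cabling records.

```python
# Hypothetical inventory: for each vSwitch, which physical switch (stack
# member) each uplink NIC is cabled to. All names are illustrative only.
UPLINKS = {
    "vSwitch0 (management/vMotion)": {"vmnic0": "3750G-1", "vmnic1": "3750G-2"},
    "vSwitch1 (VM traffic)": {"vmnic2": "3750G-1", "vmnic3": "3750G-2"},
    "vSwitch2 (iSCSI)": {"vmnic4": "3750G-1", "vmnic5": "3750G-1"},
}


def non_redundant_vswitches(uplinks: dict[str, dict[str, str]]) -> list[str]:
    """Return vSwitches whose uplinks do not span at least two physical switches."""
    return [
        vswitch
        for vswitch, nics in uplinks.items()
        if len(nics) < 2 or len(set(nics.values())) < 2
    ]


if __name__ == "__main__":
    for vswitch in non_redundant_vswitches(UPLINKS):
        print(f"{vswitch}: move one uplink to the second switch")
```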

Other Suggestions:

Once this infrastructure is in place, other things we could look at include a backup generator so that we can run indefinitely during a power failure. Also, now that our first server is four years old (assuming you’re reading this part for the next budget year), we should start looking at replacing it. Remember, though, that Essentials Plus only allows 6 cores per socket, so four years from now, when 64-core CPUs are all the rage, we will want to make sure we are not buying something that we cannot leverage because of licensing. Something that should also be budgeted for every year is maintenance on the hardware we have. Some people like to say that virtualization puts all your eggs in one basket. I do not totally agree, but I would say we should make that basket stronger than a tank and keep a good warranty on it.
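The licensing caveat can be turned into a simple pre-purchase check. `fits_license` is a hypothetical helper, not a VMware tool. It encodes the limits as this article describes them for the Essentials Plus package of that era (six physical CPUs in total, up to six cores per CPU). VMware’s licensing terms have changed since then, so check the current terms before relying on numbers like these.

```python
# Limits as described in this article for the SMB packages of that era.
# These are assumptions to verify against current VMware licensing terms.
MAX_SOCKETS_TOTAL = 6
MAX_CORES_PER_SOCKET = 6


def fits_license(hosts: list[tuple[int, int]]) -> bool:
    """hosts: one (sockets, cores_per_socket) tuple per host in the cluster."""
    total_sockets = sum(sockets for sockets, _ in hosts)
    cores_ok = all(cores <= MAX_CORES_PER_SOCKET for _, cores in hosts)
    return total_sockets <= MAX_SOCKETS_TOTAL and cores_ok


if __name__ == "__main__":
    current = [(2, 6), (2, 6), (2, 6)]         # three 2-socket, 6-core hosts
    replacement = [(2, 12), (2, 6), (2, 6)]    # first host swapped for 12-core CPUs
    print(fits_license(current))      # within the limits
    print(fits_license(replacement))  # extra cores exceed the per-socket limit
```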

VMware Data Recovery is a decent backup solution, but if we are running more than 20 virtual machines I would strongly recommend replacing it with something like Veeam Backup. Veeam has many features, such as replication and file-level recovery, that either do not exist or are not as refined in VMware Data Recovery.

Comments and suggestions are welcome.



Hi Justin,

Like your other posts this is well thought out and would fly well for any client thinking about making the leap.

One question: with your 3-host model, is there any reason you are not pitching the P2000 G3 SAS MSA? You would be saving 25k straight up on the 3750s.

Cheers,

Dave

Yup, I agree completely. When I originally wrote this article I had no experience with the SAS model, and that is the main reason I used the iSCSI model instead. The only other reason I can see to keep the iSCSI model in there is that it lets people more easily compare it to other SANs, as some vendors do not offer SAS connectivity.

Any situation where you would use NFS for shared storage in an SMB cluster?

Sure, it just depends on the use case. NFS has some advantages over iSCSI, but like anything there are some downsides too. What is your situation?

I’m looking to virtualize about 8 servers for a small company: 2 file/print, 1 Exchange, 2 DCs, 1 SharePoint, and a couple of other miscellaneous support servers. I’m just trying to see if I could get away with using NFS, as they might not be ready for the SAN purchase. If not, then I guess I could look at 1 ESXi host with local storage. I was just wondering if there was anything out there with good NFS performance for vSphere.

Shoot me an email at [email protected]. Depending on budget, you might be able to set up a very stable environment, and cheaper than this post points out as well. It is very dated compared to how we do it now.

Pingback: Recipe for SMB Cluster’s Version 2.0 | Justin's IT Blog

Hi Justin,

I was searching for ideas on how to set up such a system. You say this is dated now. Could we have an updated article please?

I’ve found version 2, http://jpaul.me/?p=1711

What would your take be on using a reconditioned 2000i G2 SAN, or is the G3 still the best way to go?

The G2 is not a bad box; we have a customer who loves theirs. Obviously the G3 will add some speed and features, but a 2000i can be upgraded to a G3 in the future: you just buy the G3 controllers and slide them in. As long as you can get an HP Care Pack on the used one, I don’t see any problem with doing that.

Justin, unless the business can withstand extended downtime, I think the single-point-of-failure SAN solution is too high a risk. And if the business can withstand extended downtime, then what’s the point of the SAN? Why not stick with local storage?

How is the SAN a single point of failure? It has redundant controllers …