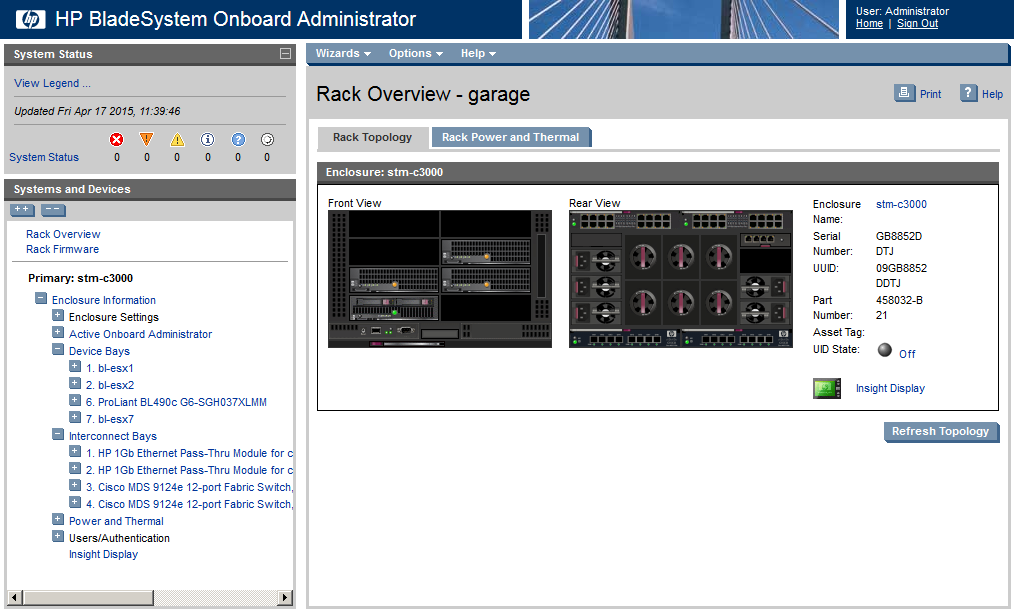

Normally during this time of year I'm preparing to shut down my home lab for the summertime, mainly because it's hot enough in the garage and because with kids I really just don't have time to mess with it. This year, however, I've been changing that up a little with a hardware refresh!

All I can say is that I've wanted a blade chassis for a while. It was going to be either a Cisco UCS or an HP C3000… unfortunately, UCS hardware is still a little too expensive for a home lab, and Kyle (you know who you are, Kyle!) hasn't sent me a "demo" unit yet 😉 Get working on that, Kyle. On the other hand, HP blade hardware is a completely untapped resource for VMware lab users. Why, you ask? Well, for one, the chassis lasts forever… the C3000 and C7000 chassis will hold Gen 1 through Gen 9 blades… and anything Gen 6 or newer works great for VMware.

Price is also a big factor. A typical dual quad-core rack-mount server with RAM will run you several hundred dollars on a good day… even more on a bad day. However, all of the blades in my new setup were less than $100 each. Some of them I picked up for less than $50 because they had no RAM or CPUs (I already had both). So why the huge price difference between a blade and a rack mount with the same specs? Demand. There aren't too many people picking up C3000s for their home lab, and most companies who do want blades will be buying new ones. So typical Economics 101 kicks in: huge supply plus low demand means great prices if you are looking to buy.

Networking is another huge advantage. Most lab environments will have at least one switch for Ethernet, and if you are hardcore you might also have a Fibre Channel switch. I've wanted a Cisco MDS switch for a while as well, just to get more up to speed on NX-OS Fibre Channel and to keep my skills in use. The problem is that a 9124 can fetch over 100 bucks by itself. Luckily, Cisco also makes the 9124e, an interconnect module for the C3000/C7000 chassis. I was able to pick up two of them for $50 shipped. On the Ethernet side we have lots of options: we can run Cisco switches in the chassis, or use pass-through modules and plug directly into our existing switch. Right now I'm using pass-through modules until I can either get the Flex-10 module I picked up to work… or find a working one at a decent price. Yes, I said Flex-10, which means 10gig Ethernet in the lab.

OK, I know what you're thinking: "That thing has to draw crazy power"… and you are right… sort of. Total power draw with just the chassis on is about 400 watts. That covers the Ethernet pass-through modules, two 9124e fiber switches, the fans, and the Onboard Administrator. Each blade then adds about 50-100 watts to that number, whereas a typical rack mount would draw 150-200 watts each, and standalone fiber switches would probably draw 50-100 watts each too. So the big picture is that for a low blade count… say 2-3 blades… it's about a wash versus rack-mount servers, but as I power on more blades, I'm actually saving power compared to rack mounts. The only bad part about the C3000 chassis is the fans: if this damn thing had wings it could probably fly, and to do that they draw crazy power… so the biggest thing you can do to avoid a $1,000 electric bill is to keep it cool and keep those fans at idle. (According to the OA, each fan draws only about 7 watts at idle, and at 90% they draw about 100 watts each.)
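To check the "about a wash at 2-3 blades" rule of thumb, here is a rough back-of-the-envelope sketch. It assumes the midpoint of each range quoted above (75 W per blade, 175 W per rack server, 75 W per standalone FC switch) and assumes two standalone FC switches to match the two 9124e modules; all names are hypothetical, and real numbers will vary with load and fan speed.

```python
# Rough power comparison: C3000 blade chassis vs. standalone rack servers.
# All defaults are ASSUMED midpoints of the ranges quoted in the post.

CHASSIS_BASE_W = 400.0   # chassis on: fans at idle, OA, pass-throughs, two 9124e modules
PER_BLADE_W = 75.0       # assumed midpoint of 50-100 W per blade
PER_SERVER_W = 175.0     # assumed midpoint of 150-200 W per rack-mount server
FC_SWITCHES = 2          # assumed: two standalone FC switches to match the two 9124e modules
PER_SWITCH_W = 75.0      # assumed midpoint of 50-100 W per standalone FC switch


def blade_watts(blades: int) -> float:
    """Total draw of the chassis with `blades` blades powered on."""
    return CHASSIS_BASE_W + blades * PER_BLADE_W


def rack_watts(servers: int) -> float:
    """Total draw of `servers` rack-mount servers plus standalone FC switches."""
    return servers * PER_SERVER_W + FC_SWITCHES * PER_SWITCH_W


def break_even_count() -> float:
    """Server count at which both setups draw the same power.

    Solves CHASSIS_BASE_W + n * PER_BLADE_W == n * PER_SERVER_W + FC_SWITCHES * PER_SWITCH_W.
    """
    return (CHASSIS_BASE_W - FC_SWITCHES * PER_SWITCH_W) / (PER_SERVER_W - PER_BLADE_W)


def fan_spinup_watts(fans: int, idle_w: float = 7.0, busy_w: float = 100.0) -> float:
    """Extra draw when `fans` fans go from idle (~7 W) to ~90% (~100 W each, per the OA)."""
    return fans * (busy_w - idle_w)


if __name__ == "__main__":
    print(f"break-even at about {break_even_count():.1f} servers")
    for n in range(1, 9):
        print(f"{n} servers: blades {blade_watts(n):.0f} W, rack {rack_watts(n):.0f} W")
```

With these midpoints the break-even lands at 2.5 servers: two blades draw 550 W versus 500 W for two rack servers, while at three it flips to 625 W versus 675 W. The fan helper shows why cooling matters, since every fan that spins up adds roughly 93 W on top of the idle baseline.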

So my goals with this new setup are just a few:

- Have cheap access to new servers

- Have a platform that can go to 10gig as soon as possible

- Have Fibre Channel connectivity

- Have good remote control capability

I think the C3000 definitely fits the bill for those objectives, and so far I’m loving the new setup.


Great writeup on your lab design. Since you're using the Cisco fiber switches and/or the Flex-10, what kind of SAN device are you connecting the fiber to for storage, or is that still being worked out? Thanks.

A VNX5300 block system is attached to the Cisco MDS modules.

Yep, it's official… you have too much money. LOL. An EMC VNX5300 is a serious storage device.

Married… two kids… No money at all 🙂

This 5300 was destined for a dumpster after its maintenance was up, so I gave it a warm home in the garage. Otherwise I would be running something MUCH smaller.

Hi, great to see you picking these up for cheap. I used to set up c7000s at a big corp in the past, but I have one question about a small setup. Do you think it's possible to just have a pair of firewalls and a c3000 with Virtual Connect switches to split up 32 IPs, or do you still think you need some standalone routers/switches in between to trunk the VLAN to the chassis?

I'm not a Virtual Connect expert, but as long as both sides of the wire understand 802.1Q tagging, you shouldn't need a router…
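To make "both sides understand 802.1Q tagging" a bit more concrete, here is a minimal sketch of what the tag looks like on the wire: a 4-byte header (TPID 0x8100, then a 16-bit Tag Control Information field holding a 3-bit priority, a 1-bit DEI, and a 12-bit VLAN ID) inserted right after the source MAC. It only illustrates the frame format; it is not Virtual Connect or firewall configuration, and the function names are hypothetical.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as 802.1Q-tagged


def dot1q_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag: TPID followed by the Tag Control Information."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094 (0 and 4095 are reserved)")
    if not 0 <= priority <= 7:
        raise ValueError("priority (PCP) is a 3-bit field")
    if dei not in (0, 1):
        raise ValueError("DEI is a single bit")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def tag_frame(frame: bytes, vlan_id: int) -> bytes:
    """Insert a tag right after the destination and source MACs (first 12 bytes).

    Assumes `frame` excludes the FCS, which would need recomputing on a real wire.
    """
    return frame[:12] + dot1q_tag(vlan_id) + frame[12:]


def vlan_of(frame: bytes):
    """Return the VLAN ID of a tagged Ethernet frame, or None if it is untagged."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    return tci & 0x0FFF if tpid == TPID_8021Q else None
```

On a trunk, each end reads the VLAN ID to sort frames into the right network, which is the property the reply above relies on: a firewall with tagged subinterfaces and a tagged chassis uplink can agree on VLANs directly.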

Hi Justin

Nice home lab. I've just picked up an HP C3000, as in terms of density and cost of blades compared to multiple R710s etc., it's a no-brainer, I think.

On the Flex-10 side, do you also need mezz cards in the blade servers for this to work? I'm assuming yes. And in terms of the C3000 backplane, how does the networking side of things work? If every blade is on the same subnet, does traffic get pushed across the backplane via the pass-through switch blade (i.e. it doesn't hairpin to the switch uplinked from the chassis itself)?

Looking forward to playing around with it.

Thanks

Hey Martin… glad to hear I'm not the only crazy person out there!

You shouldn't need any extra mezz cards as long as your blades are G6 or newer, as they have Flex-10-compatible dual-port NICs onboard.

Also, the Flex-10 module is an L2 switch, so as long as you are on the same VLAN, your traffic will be switched locally on the Flex-10 module. If it has to be routed, it will go north to whatever your L3 device is.
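The "switch locally vs. go north" behavior comes down to a decision the sending host makes before the frame ever reaches the Flex-10: if the destination is in its own subnet it addresses the frame to that host's MAC, otherwise to the default gateway's MAC. Here is a minimal sketch using Python's `ipaddress` module, assuming one subnet per VLAN and a gateway that is reachable only through the chassis uplink; the addresses and the `next_hop` name are hypothetical.

```python
import ipaddress


def next_hop(src_ip: str, prefix_len: int, dst_ip: str, gateway_ip: str) -> str:
    """Return the IP whose MAC the sender will put in the frame's destination field.

    Assumes one subnet per VLAN and a default gateway reachable only via the
    chassis uplink (a hypothetical lab layout).
    """
    local_net = ipaddress.ip_interface(f"{src_ip}/{prefix_len}").network
    if ipaddress.ip_address(dst_ip) in local_net:
        # Same subnet/VLAN: the sender ARPs for the destination directly, so the
        # L2 interconnect switches the frame between blades inside the chassis.
        return dst_ip
    # Different subnet: the frame is addressed to the gateway's MAC, which the
    # interconnect learned on the uplink, so the traffic goes north to the L3 device.
    return gateway_ip


if __name__ == "__main__":
    print(next_hop("10.0.10.21", 24, "10.0.10.22", "10.0.10.1"))
    print(next_hop("10.0.10.21", 24, "10.0.20.5", "10.0.10.1"))
```

The first call returns the peer blade's address (stays on the interconnect); the second returns the gateway's address (heads out the uplink).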

Let me know how your mileage goes as you build out your lab… I'm always open to guest posts too, if you want to share with others.

Thanks for reading!

Thanks Justin for the info!

One point a friend mentioned was to check that the chassis is a G2 C3000, as the G1 does not support the full bandwidth of the Flex-10. Have you ever heard that? It's the first I've heard of it, and I can't find any supporting literature on the internet. My model number is 508668-B21, so I'm hoping that point is moot either way!

Yep I got hold of G6 blades so that should be fine – thanks again!

Wow, glad I'm not the only crazy one. I have two c7000 blade enclosures in my garage that are ready to hook up. I had to have two 40-amp 220V circuits put in to power these things. It's good to hear other people's experience with the different I/O modules. I already have SAS2 storage arrays and am wondering whether to stick with those or convert to fibre or iSCSI, since the c7000s will be on a 10Gb network. A 6Gb SAS I/O module runs about $500+ on eBay, not cheap at all.

You could always run a rack mount server with a SAS card, and then hook it via 10gig iSCSI into the C7000?

So I found out the G6 blades I have all came with fibre mezz cards along with VC-FC modules. I hooked them up to my fibre array and it works great! Since my last post, I came across two more c7000s with Gen8 blades that were destined for salvage (don't know why). These all came with InfiniBand mezz cards and a switch module. So now I'm in the process of trying to build a storage array with IB that will work with what I have, without spending hundreds of dollars. Have you worked with InfiniBand before?

I'm working with a c3000 that my employer was tossing out. I have some blades, including a BL460c G7. The included drives are only 73GB. My issue is that I purchased two 1TB SATA drives (Seagate FireCuda), and the server says they are overheating. From what I have read, this is a common issue with HP servers: most, if not all, third-party drives apparently do not report their temperature correctly. What does everyone use for drives? Strictly HP drives?

I am running some Dell SAS drives in one of my blades, but mostly it's all external Fibre Channel SAN.